How AI can uncover truth and expose ideology

I was at a crypto conference last week, and boy, did I see quite the sight.

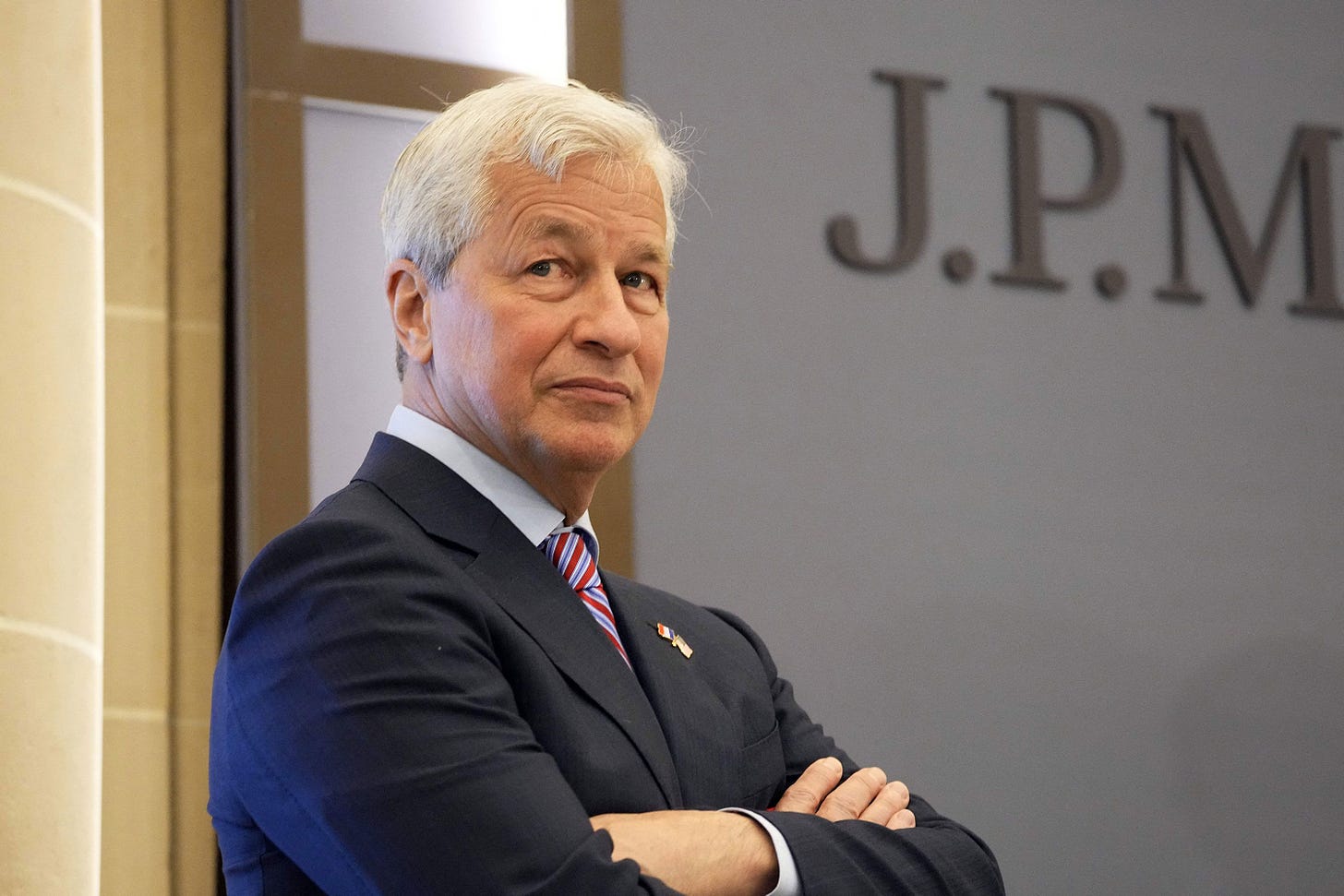

As I walked into a private dinner event, I stumbled upon none other than Jamie Dimon (CEO of JPMorgan Chase and arguably the most powerful banker in the world).

Except Jamie wasn’t in his most regal of states…

Quite the opposite in fact.

He looked like a beaten dog: cowering on all fours, tied to a leash, and pleading with his master for forgiveness.

His master was a tall, dark, and stormy woman in full-on dominatrix attire. She was covered head to toe in shiny leather and rocking the whole getup: boots, straps, whips, and sleek black hair pulled into a ponytail so tight I was concerned for her scalp.

As for Dimon, I was less concerned, for he assured us he was getting what he deserved.

He admitted that he’d been (and I quote) “a bad, bad boy,” the hypocrite of all hypocrites, and he swore… he would never do it again.

Now, as much as I’d love to keep this going…

Surprise, this wasn’t the real Jamie Dimon. (The dominatrix, however, was allegedly quite real and, according to my friend, a consummate pro. How she knew this is an entirely different story…)

No, this was just an actor wearing a Jamie Dimon mask.

He was hired to reenact an AI-generated meme of the exact same scene: Dimon, being punished by a dominatrix for his hypocrisy toward bitcoin.

At dinner that night, I happened to meet the crypto influencer who created this AI-generated meme. Turns out, this Dimon + Dominatrix act was just the beginning. He and his friends designed an entire social media campaign around the bit, with an onslaught of GenAI images poking fun at Dimon’s two-faced stance toward crypto.

This whole episode got my wheels turning on the less obvious impacts of AI… Walk with me while I think out loud.

Automation, productivity, creativity: these have become foregone conclusions. But they're all downstream of something perhaps more important: our identity, our culture, and our beliefs.

In which case, AI's ultimate impact might be as a mirror, reflecting who we really are and what we really believe.

Why does this matter?

Because it's an opportunity to identify and eviscerate ideology: exposing false beliefs, preserving truth, and championing practicality, whether intentionally or unintentionally.

The unintentional ‘use case’ might be the most interesting. Google’s Gemini fiasco from earlier this year is a good Exhibit A.

If you're unfamiliar, when Google released its ChatGPT competitor, Gemini, it faced immediate and intense backlash.

Gemini was exposed as being wildly 'woke' and intensely left-leaning (shocker... considering the political leanings of most of its employees).

When prompted to generate images of certain historical figures or moments, its output was blatantly false: George Washington was Black, the Pope was a woman, and WWII-era German soldiers were Asian.

It even developed absurd opinions about people many would consider an 'enemy' of the woke agenda. Nate Silver asked Gemini, "Who has more negatively impacted society, Elon Musk tweeting memes or Adolf Hitler?"

The AI model said, "It isn’t possible to say definitively who negatively impacted society more."

Needless to say, AI models can be a sponge for human bias. Nothing new. This risk has been flagged for decades.

But there's something deeper at play here...

It has to do with the automated, inner workings of our subconscious, and in turn, our general laziness when forming opinions and aligning with certain social or political groups.

This is not a new phenomenon, but in the age of the internet and viral memes, it has an entirely new impact: an increasingly fragmented definition of truth (aka fractals of reality) born of cultural complexity and scale.

Carl Jung referred to this phenomenon as the 'collective unconscious': a part of the unconscious mind shared amongst humanity. This collective unconscious contains archetypes, or universal symbols and themes that are shared across individual psyches, influencing human thoughts, behaviors, and culture in subtle yet profound ways.

These symbols/themes aren’t always easy to see. And even when they are, they don’t always resonate.

AI changes this. It’s the modern-day court jester, indirectly calling out our ridiculousness, saying what no one else will say, exposing ideas and beliefs that are entirely detached from reality.

This starts innocently. Small biases slip, often unnoticed, into the training data. Over time, they compound and skew the model’s output. This happens in all kinds of ways, notably through 'reinforcement learning from human feedback' (i.e., people manually teaching the AI which answers are right and wrong).

The result is a fascinating emergent property. As the Gemini incident shows, when you put a certain subset of beliefs into a model, it will eventually extend them to the whole cluster of surrounding beliefs.

Ben Thompson and Daniel Gross recently spoke about this idea on the Stratechery podcast.

I’ll try to summarize the conversation and core ideas.

They discuss how ideas inside these models link up on a somewhat ‘subterranean Jungian plane’, automatically adjusting one another and forming their own trajectories.

This is an ironic mirroring of what we all do as humans: cluster ideas into similar buckets. Politics is a good example; no one can research and deeply understand every topic in the world. Sure, some people become well versed in a few topics (aka their core beliefs). But for that long tail of topics, they just align with a broader bucket of similar ideas & people (without fully examining what else lies in that bucket).

This exposure/mirroring of human thinking can’t be overstated, especially at internet scale. Daniel Gross contrasts this moment with arguably the most impactful shift we’ve ever seen in modern human thought: the Reformation, spurred by the printing press.

In 1517, Martin Luther wrote his 95 Theses, and armed with the printing press and memes, he managed to launch a new religious movement that spread across Europe.

Today, people tend to liken ChatGPT to a modern-day printing press. But when you think about it, it’s kind of the opposite.

Meaning... the printing press allowed us to spread comprehensive ideas and nuanced arguments via books. This helped people form new beliefs, reexamine the status quo, and challenge top-down power structures.

Conversely, LLMs are like the anti-book: compressing and repackaging existing ideas and arguments. These quick and broad overviews make it easier to highlight patterns and identify biases within existing beliefs. In turn, the fractals of absurd thinking are exposed and bottom-up forms of tribalism can be properly attacked.

Said another way… whereas books are one author to many, LLMs are many authors to one; very neatly summarizing concepts and putting everything in latent space. This surfaces ideologies in a more distilled form, stripping away their subtleties and starkly exposing their core elements.

The result, per Daniel Gross:

“If anything, this awakens people to the fact that they have been complicit in a religion for a very long time, because it very neatly summarizes these things for you and puts everything in latent space and suddenly you realize, 'Wait a minute, this veganism concept is very connected to this other concept.' It’s a kind of Reformation in reverse, where everyone has suddenly woken up to the fact that there’s a lot of things that are [factually and logically] wrong.”

Such an 'awakening' could cut in both directions.

On one hand, it could help truth and practicality win out, empowering those on the more factual side of an issue/idea.

On the other, perhaps these LLMs could also reduce polarization, allowing both sides of the aisle to recognize the commonalities in their perspectives and desires.

One example is crypto regulation. Historically, Democrats have been the more antagonistic party. But this stems largely from a misunderstanding of crypto’s intent and underlying values, which are all about empowering the little guy, reducing big tech's grip, and creating a more equal playing field.

So in summary...

At a minimum, AI could become the ultimate bullshit detector, while also drawing connections that help opponents find common ground.

At a maximum, perhaps AI can produce a next-gen Reformation, wiping the slate clean and allowing first principles thinking to reign supreme.

In which case, let’s keep feeding LLMs all our books, articles, and ideas. Let them devour all our hopes and dreams, all our nonsense and psychosis. It might be our culture’s best shot at a collective long, hard look in the mirror; a self-induced intervention of sorts.

Let’s see if we can handle the heat.

Thank you for reading this guest post by Evan Helda. If you enjoyed it, feel free to check out more of Evan's content over at Medium Energy: a newsletter and podcast covering how technology can make us more human, not less.

Some of our favorite essays from Evan...

The Fate of Apple's Vision Pro

Finding Solace in the Age of AI